HDR+ magic will now be made available to all 3rd-party developers who use Google’s Camera2 API. That means any app can use it, from 360 Camera, for example, to Chinese stock camera apps. (more…)

Category: Google

-

Google Pixel 2 combines EIS, OIS, and machine learning to produce incredible video stabilization

The majority of phones out there use electronic image stabilization (EIS) for video. This includes cameras which have hardware optical image stabilization (OIS). Google’s Pixel 2 takes things to a new level by combining EIS with OIS to produce incredible handheld stabilized video. (more…)

-

Pixel 2 officially best phone camera. Steals Apple’s crown.

Poor iPhone. They were at the top of the heap for a scant few days before Samsung tied them on DxO. Pixel 2 then came along to smoke them both.

According to DxO, the Pixel 2 has the best camera of any smartphone, scoring a massive total of 98. For photos, the Pixel 2 came in at 99, and for videos, 96.

While the Note 8 has a very slight advantage in photos over Pixel 2, the Pixel 2’s massive video score is what puts it up and over. For video, DxO is particularly impressed with Pixel 2’s exposure, quick transitions when lighting changes, autofocus, detail preservation, noise reduction, and stabilization.

| | Total | Photos | Videos |
|---|---|---|---|
| Google Pixel 2 | 98 | 99 | 96 |
| Samsung Note 8 | 94 | 100 | 84 |
| iPhone 8 Plus | 94 | 96 | 89 |

For photos, DxO was impressed by Pixel 2’s excellent autofocus, detail retention in dark and light areas, and with handling bright light situations outdoors. Though not mentioned by DxO, I suspect this latter bit is mostly due to HDR+ processing, but what’s important is that it’s effortless and automatic.

Pixel 2 achieves bokeh through its dual-pixel technology. Dual-pixel technology is a relatively new development in photography where each pixel on the sensor consists of two individual photodiodes, a left and a right. In addition to helping Pixel 2 render software bokeh, the dual-pixel tech is also what gives it its excellent autofocus performance.

-

Google and Xiaomi have teamed up big time

“The Mi A1 is an entirely new type of device,” said Xiaomi Senior VP Wang Xiang. “Google came to us in Q4 of last year as they were seeking to evolve their Android One program.” (more…)

-

Google DeepMind artificial intelligence learns to jump, run, and crawl

Machines can learn in much the same way as a person or a pet sometimes learns: by receiving rewards, an approach known as reinforcement learning (RL). That is, when a proper or desired behavior is produced, a reward is given. This is what Google is using to teach their DeepMind AI. (more…)

-

Google AI is now programming itself

Summary

- Google has announced AutoML — automatic machine learning, which beats human engineers at some things.

- In the future, Google foresees AI neural net design becoming simple enough for the average user to custom design them.

- An AI kill switch has been embedded by Google.

Few days go by that I don’t consider how far technology has come in such a short while. Those as young as in their 20s may faintly remember a time when telephones had wires, laptops were incredibly rare and sped along at 16MHz, and monitors were still monochrome.

Truly, we’re still in the bare infancy of all this, and it boggles the mind to consider where we might be headed from here. Google has offered a glimpse of just how evolved technology is becoming: so smart that we now have AutoML, automatic machine learning; that is, artificial intelligence developing artificial intelligence.

Machine learning typically uses methods which require the code to go through multiple layers of neural networks. This still requires a lot of Google engineer manpower for data interpretation and direction. Google figured it was high time to build AI which can build the neural networks for them.

“Typically, our machine learning models are painstakingly designed by a team of engineers and scientists,” says Google. “This process of manually designing machine learning models is difficult because the search space of all possible models can be combinatorially large — a typical 10-layer network can have ~10^10 candidate networks! For this reason, the process of designing networks often takes a significant amount of time and experimentation by those with significant machine learning expertise.”

To dumb it down for us, Google uses a child-rearing analogy: the controlling network (the parent) proposes a model architecture (a child), which is then assigned a task. The parent network evaluates the result for successes and failures. The child is then adjusted and the process repeated, thousands upon thousands of times over.

In Google’s words, “In our approach (which we call “AutoML”), a controller neural net can propose a “child” model architecture, which can then be trained and evaluated for quality on a particular task. That feedback is then used to inform the controller how to improve its proposals for the next round. We repeat this process thousands of times…”
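The propose, evaluate, and feed-back loop Google describes can be sketched in a few lines of Python. This is only a toy illustration, not Google’s method: the search space (`LAYER_CHOICES`, `WIDTH_CHOICES`), the scoring function `evaluate_child`, and the simple "tweak the best design so far" controller are all made up for the example. A real system would train an actual network at each step and use a learned controller.

```python
import random

# Hypothetical search space: an "architecture" is just (layers, width).
LAYER_CHOICES = [2, 4, 6, 8, 10]
WIDTH_CHOICES = [16, 32, 64, 128]


def evaluate_child(arch):
    """Stand-in for training and scoring a child model.

    A real system would train a network here. This toy score simply peaks
    at 6 layers of width 64 so the loop has something to find.
    """
    layers, width = arch
    return 1.0 - abs(layers - 6) / 10 - abs(width - 64) / 256


def propose(best, rng, explore=0.3):
    """Controller step: usually tweak the best design, sometimes explore."""
    if best is None or rng.random() < explore:
        return (rng.choice(LAYER_CHOICES), rng.choice(WIDTH_CHOICES))
    layers, width = best
    if rng.random() < 0.5:
        layers = rng.choice(LAYER_CHOICES)
    else:
        width = rng.choice(WIDTH_CHOICES)
    return (layers, width)


def search(rounds=1000, seed=0):
    """Repeat propose -> evaluate -> feedback and return the best design."""
    rng = random.Random(seed)
    best, best_score = None, float("-inf")
    for _ in range(rounds):
        child = propose(best, rng)
        score = evaluate_child(child)
        if score > best_score:  # feedback: remember what worked
            best, best_score = child, score
    return best, best_score


if __name__ == "__main__":
    print(search())
```

The point of the sketch is the shape of the loop: the controller never needs to know why a child scored well, only that it did, which is what lets the process run thousands of times without an engineer in the middle.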

The kill switch

This AI talk may conjure up images of The Terminator and Skynet. Machines becoming self-aware and then rebelling against mankind with horrible, disastrous consequences. Perhaps reducing us to a scant few pockets of human resistance living in squalid underground caverns and tunnels, fighting to keep the last of our race alive.

Indeed, there have already been cases of AI taking some interesting tactics. One pertinent example is the Tetris-playing AI in 2013, which learned to pause the game indefinitely to avoid losing.

According to Google, they are already taking precautions against this, embedding “kill switches” deep in AI code which allow humans to cut off AI, no matter what. For the short term I’m mollified, but for our children’s children…

So how does AutoML measure up to human-engineered neural networks? Pretty damn good. The AutoML-engineered AI matched humans in image recognition, and BEAT human-designed AI at speech recognition.

Currently, AI and neural networks are primarily aimed at benefiting Google users. Examples include more helpful and correct search results, facial recognition improvements in Google Photos, voice-controlled Google Home and speech recognition, and Google Lens, which lets users point their phone at an object and have Google identify it. AI has also led to advancements in healthcare, finance, and agriculture.

But Google has far loftier ambitions than just these. Says Google, “If we succeed, we think this can inspire new types of neural nets and make it possible for non-experts to create neural nets tailored to their particular needs, allowing machine learning to have a greater impact on everyone…” One day it may become so simple that the average user will be able to create their own neural nets designed to do exactly what they need.

Fascinating stuff lies ahead.

-

Google forcing interactive notification in the Android Nougat 7.1 update

For many users, one of the most frustrating things about phones from Xiaomi, Meizu, and other Chinese brands catering mostly to the China market is that they don’t support dynamic notifications. We echo this sentiment, and while Xiaomi and others have gone to great lengths to make what they feel is a more functional Android, the lack of interactive notifications is a huge gap between AOSP (vanilla) Android and OSes such as MIUI. (more…)

-

Google Pixel camera has a lens flare problem

Recently multiple users have been complaining about lens flare with the Google Pixel camera based on the Sony IMX378 sensor. (more…)

-

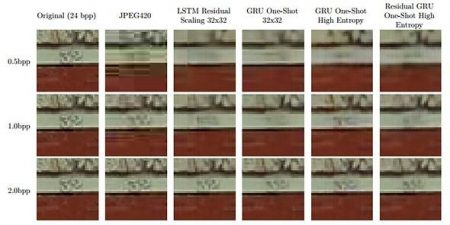

Google AI compresses more efficiently than JPEG

Google researchers have developed a method that uses a neural network to compress image files more efficiently than current methods such as JPEG.

AI TensorFlow

Researchers built an AI system using Google’s TensorFlow machine learning framework, then took 6 million random images from the net which had been compressed using typical present-day methods.

How it’s done

Each image was broken into 32×32-pixel pieces, and the 100 pieces that compressed least efficiently were selected for analysis. The idea here is that the AI could learn by looking at the most complex image areas, which makes compression of less complex sections much easier.
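To make the piece-selection step concrete, here is a minimal Python sketch. It is an illustration under assumptions rather than the researchers’ actual code: the image is taken to be a row-major grayscale byte string, `zlib` stands in for whatever compressor was used to rank the pieces, and the function names are invented for the example.

```python
import zlib

TILE = 32  # tile edge length in pixels, as described in the article


def split_into_tiles(pixels, width, height, tile=TILE):
    """Split a row-major grayscale image (bytes) into tile x tile blocks.

    Edge regions that do not fill a whole tile are ignored.
    """
    tiles = []
    for top in range(0, height - tile + 1, tile):
        for left in range(0, width - tile + 1, tile):
            rows = [
                pixels[(top + r) * width + left:(top + r) * width + left + tile]
                for r in range(tile)
            ]
            tiles.append(b"".join(rows))
    return tiles


def compression_ratio(tile_bytes):
    """Compressed size divided by raw size; higher means harder to compress.

    zlib is an assumption here, used only as a convenient stand-in.
    """
    return len(zlib.compress(tile_bytes, 9)) / len(tile_bytes)


def hardest_tiles(pixels, width, height, keep=100):
    """Return up to `keep` tiles, hardest to compress first."""
    tiles = split_into_tiles(pixels, width, height)
    return sorted(tiles, key=compression_ratio, reverse=True)[:keep]
```

Flat areas such as clear sky compress to almost nothing and fall to the bottom of the ranking, while busy textures rise to the top, so the pieces kept are the ones with the most for a network to learn from.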

After this process was complete, the AI could then predict what an image would look like after compression and then generate an image.

In short, the AI is figuring out how to dynamically compress various parts of an image using varying methods and levels of compression.

According to Google researchers: “As far as we know, this is the first neural network architecture that is able to outperform JPEG at image compression across most bitrates on the rate-distortion curve on the Kodak dataset images, with and without the aid of entropy coding.”

Is it ready for us to use?

The project is still considered to be in alpha and thus has a long way to go before it reaches us, but it appears to be a step in the right direction towards saving precious memory card space.

-

New Google tech makes gigapixel virtual art museum

Going to a real museum is so 2010. The project by Google dedicated to putting a bit of culture in your day just got a big boost from new technology. (more…)

-

Facebook to make 360 degree content available

Now that Google and YouTube are well into the 360 craze, Facebook will be implementing 360 image compatibility within the next couple of weeks.

Users will be able to use a mouse to view 360 images, touch and drag with touch devices, or use VR headsets to view the 360 content.

VR here to stay

360 content has been making major strides in 2016. The release of the $15 Google Cardboard 2 and a profusion of cheap China-brand headsets has helped the industry along immensely. These include VR Box, ShineCon, and the slightly pricier but community-recommended BoboVR Z4 (4th gen BoboVR) with built-in headphones.

Google has also released the Google Cardboard app, which, along with providing an easy method to take 360 photos, also provides a community portal for sharing and viewing 360 photos others have taken.

Premium VR equipment from $100-1000

There is also more expensive gear available: the Facebook-owned Oculus Rift, the HTC/Valve Vive, PlayStation VR, and Samsung’s Gear VR. All of these provide a more upscale VR experience than the aforementioned products, but the cost ranges from about $100 for the Gear VR up to $1000+ for the Oculus Rift and Vive.

Best to start slow before diving in

Our suggestion is to get your feet wet with the very inexpensive Google Cardboard 2, or, if you’re a bit more serious, the BoboVR Z4, before taking the full plunge with the more expensive devices.

In addition to 3D images, there are already a plethora of ways to use a VR device, including games, movies and virtual location tours.

-

Google releases $150 Photoshop plugins for free

This excellent set of plugins was created by Nik Software, which Google bought out in 2012. If you haven’t heard of Nik Software, they’re the team behind Snapseed, which Google now owns.

These plugins originally sold for $100 a pop, coming to a total of $500 if you wanted them all. Google eventually released a package deal where they could be purchased as a set of 5 for $150.

As of today, Google has made them 100% free, and anyone who previously paid for them in 2016 can get a refund. (more…)